WASHINGTON, DC — The speed at which artificial intelligence (AI) is being embraced at the VA has legislators both hopeful and concerned, optimistic that it can improve veterans’ health but worried about data privacy and the possibility of software superseding human decision-making in clinical settings.

“AI, we’re told, promises all,” said Rep. Mariannette Miller-Meeks (R-IA), chair of the House VA Subcommittee on Health, at one of two hearings on the topic held earlier this year. “While AI holds great promise, the reality is it’s a new, developing technology, and we’re still figuring out what is possible, practical and ethical.”

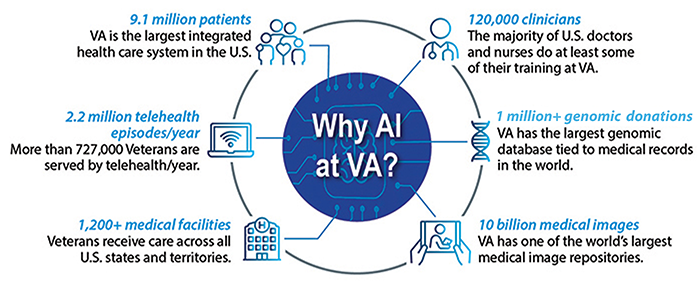

As of last year, VA had more than 100 AI use cases in its system, 40 of them in some type of operational phase. Examples range from using computer vision to help with endoscopies to analyzing customer feedback.

VA recently launched the AI Tech Sprint, focusing on addressing staff burnout by streamlining administrative tasks such as clinical note-taking and the processing of paper medical records. VA has allocated nearly $1 million for contract and software costs and plans to offer $1 million in prizes as part of the sprint.

“The department believes that AI represents a generational shift in how our computer systems work and what they will be capable of,” declared VA’s AI Chief Charles Worthington. “If used well, AI has the potential to empower VA employees to provide better healthcare, faster benefits decisions and more secure systems.”

Much like with the rise of smartphones or cloud computing, the department will be forced to adapt, he said.

The failures of recent AI clinical applications have some legislators concerned, however. Miller-Meeks brought up one promising AI tool for the diagnosis of sepsis, which generated alerts for 18% of all hospital patients while missing 67% of actual diagnosed cases of sepsis.

VA Assistant Under Secretary for Health Carolyn Clancy, MD, told legislators that no healthcare system has yet worked out a measured approach to adopting AI technology that would “balance benefits while being attentive to risks.”

“[The sepsis technology] is one that suffered from an excess of enthusiasm, which was not to the patients’ benefit,” she said.

VA hopes to avoid a similar outcome with the technology that emerges from its AI Tech Sprint.

“We are very focused on not just the outcome of the tech sprints but some of the other steps we need to take for VA to adopt these in scale,” Worthington explained. “Things around contract approaches, underlying technical infrastructure to support the hosting of these tools … as well as the workforce. There’s work we’re going to have to do on the AI practitioner side, so we have a workforce that understands how to use these tools. We’re starting to make investments in all of those areas now, so we’re ready to receive promising insights from things like those tech sprints.”

One of the most promising uses of AI is assisting clinicians with writing notes, filing orders and entering that data into the medical record.

“Having seen one of these tools demoed live, it was quite amazing,” Clancy said. “We’re going to be testing all of this in our simulation center in Orlando, so people can figure out what the workflows are.”

Rep. Greg Murphy, MD (R-NC), who trained as a urologist, voiced concern about what would happen if a clinician disagreed with the recommendation of an AI tool.

“There’s going to be massive liability concern in my opinion,” he said. “What if you’re staring at a patient and the AI generator says [you should do this next,] and you’re thinking ‘I don’t think so’ and then, God forbid, if something else should happen, who is liable?”

Clancy assured him that the clinician will always take precedence.

“Many physicians refer to AI as augmented intelligence, not artificial intelligence,” she said. “The human in the loop is important.”

Another concern was the right of veterans to know about and consent to having their medical information fed into an AI model. At a hearing earlier in the month, Rep. Matt Rosendale (R-MT) got VA leaders to agree that the department has a responsibility to notify veterans if such technology is going to be used in their care.

When he asked when that informed-consent process would be put in place, Rosendale was told that VA does not have a set timeframe.

“You’re putting the cart before the horse,” he declared. “You’re utilizing AI, and you’re not disclosing it to veterans. You’re not giving them a choice. That’s dangerous. It truly is. It’s dangerous, and it’s dishonest.”

Rosendale also asked the VA panel whether any testing had shown that AI can perform analysis more accurately than human physicians.

“We do not have that information,” Clancy said. “And I think that’s going to be really important. Women, recently, have been given the opportunity to spend an additional $40 to get an AI-enabled mammogram. Most of the physicians interviewed … said they had no idea if it’s worth the money.”